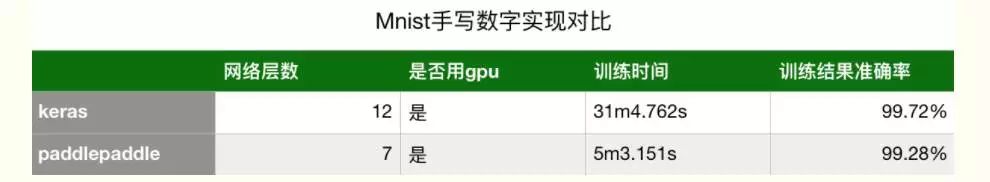

Last week, while looking for information on distributed deep learning, I came across PaddlePaddle and found that its distributed training scheme is quite good, so I wanted to share it. That topic is fairly involved, though, so I will start with PaddlePaddle's "hello world" program: MNIST handwritten digit recognition. Next time I will cover distributed training with PaddlePaddle. I have previously written an article on recognizing handwritten digits with a CNN implemented in Keras, so after using PaddlePaddle this time I can briefly compare the pros and cons of the two frameworks.

## What is PaddlePaddle?

PaddlePaddle is a deep learning framework released by Baidu. Most people probably use TensorFlow, Caffe, MXNet and so on, but PaddlePaddle is also a very good framework. (It is said to have been called Paddle before being renamed PaddlePaddle. I don't know why, and the name strikes me as a little odd.)

## What can PaddlePaddle do?

It handles all the traditional basics and is especially suited to NLP tasks such as sentiment analysis, word embeddings, and language models. In any case, it is worth trying out.

## PaddlePaddle installation

I have to complain about installing PaddlePaddle. The official website says that "PaddlePaddle's only officially supported way of running is the Docker container", and Docker is not particularly popular in China. Every framework I had used before offered several convenient installation methods, so supporting only Docker came as a surprise. However, I tried `pip install` on a whim and it worked, even though the official website does not mention it. So, for beginners, the easiest way to install is:

CPU version installation:

`pip install paddlepaddle`

GPU version installation:

`pip install paddlepaddle-gpu`

## Handwritten digit recognition with PaddlePaddle

### Training steps

I will skip the traditional methods this time and again train with a CNN, to make the comparison easier.
The complete process of training a model with PaddlePaddle is as follows:

Import data -> Define network structure -> Train model -> Save model -> Test results

Below I show the training process directly in code (the code will be put on GitHub later):

```python
# coding: utf-8
# Written for Python 2 (note the print statements)
import os

from PIL import Image
import numpy as np
import paddle.v2 as paddle

# Whether to use the GPU: WITH_GPU=0 means no, WITH_GPU=1 means yes
with_gpu = os.getenv('WITH_GPU', '0') != '0'


# Define the network structure
def convolutional_neural_network_org(img):
    # First convolution + pooling layer
    conv_pool_1 = paddle.networks.simple_img_conv_pool(
        input=img,
        filter_size=5,
        num_filters=20,
        num_channel=1,
        pool_size=2,
        pool_stride=2,
        act=paddle.activation.Relu())
    # Second convolution + pooling layer
    conv_pool_2 = paddle.networks.simple_img_conv_pool(
        input=conv_pool_1,
        filter_size=5,
        num_filters=50,
        num_channel=20,
        pool_size=2,
        pool_stride=2,
        act=paddle.activation.Relu())
    # Fully connected layer
    predict = paddle.layer.fc(
        input=conv_pool_2, size=10, act=paddle.activation.Softmax())
    return predict


def main():
    # Initialize the device the model runs on
    paddle.init(use_gpu=with_gpu, trainer_count=1)

    # Define the input data layers
    images = paddle.layer.data(
        name='pixel', type=paddle.data_type.dense_vector(784))
    label = paddle.layer.data(
        name='label', type=paddle.data_type.integer_value(10))

    # Call the network structure defined above
    predict = convolutional_neural_network_org(images)

    # Define the loss function
    cost = paddle.layer.classification_cost(input=predict, label=label)

    # Create the training parameters
    parameters = paddle.parameters.create(cost)

    # Define the optimization method
    optimizer = paddle.optimizer.Momentum(
        learning_rate=0.1 / 128.0,
        momentum=0.9,
        regularization=paddle.optimizer.L2Regularization(rate=0.0005 * 128))

    # Create the trainer
    trainer = paddle.trainer.SGD(
        cost=cost, parameters=parameters, update_equation=optimizer)

    lists = []

    # Define event_handler to print results during training
    def event_handler(event):
        if isinstance(event, paddle.event.EndIteration):
            if event.batch_id % 100 == 0:
                print "Pass %d, Batch %d, Cost %f, %s" % (
                    event.pass_id, event.batch_id, event.cost, event.metrics)
        if isinstance(event, paddle.event.EndPass):
            # Save the parameters
            with open('params_pass_%d.tar' % event.pass_id, 'w') as f:
                parameters.to_tar(f)

            result = trainer.test(reader=paddle.batch(
                paddle.dataset.mnist.test(), batch_size=128))
            print "Test with Pass %d, Cost %f, %s" % (
                event.pass_id, result.cost, result.metrics)
            lists.append((event.pass_id, result.cost,
                          result.metrics['classification_error_evaluator']))

    trainer.train(
        reader=paddle.batch(
            paddle.reader.shuffle(paddle.dataset.mnist.train(), buf_size=8192),
            batch_size=128),
        event_handler=event_handler,
        num_passes=10)

    # Find the pass with the lowest test cost
    best = sorted(lists, key=lambda item: float(item[1]))[0]
    print 'Best pass is %s, testing Avgcost is %s' % (best[0], best[1])
    print 'The classification accuracy is %.2f%%' % (100 - float(best[2]) * 100)

    # Load an image as a flattened, normalized 28x28 grayscale vector
    def load_image(file):
        im = Image.open(file).convert('L')
        im = im.resize((28, 28), Image.ANTIALIAS)
        im = np.array(im).astype(np.float32).flatten()
        im = im / 255.0
        return im

    # Test the result on a single image
    test_data = []
    cur_dir = os.path.dirname(os.path.realpath(__file__))
    test_data.append((load_image(cur_dir + '/image/infer_3.png'), ))

    probs = paddle.infer(
        output_layer=predict, parameters=parameters, input=test_data)
    lab = np.argsort(-probs)  # probs and lab are the results for one batch of data
    print "Label of image/infer_3.png is: %d" % lab[0][0]


if __name__ == '__main__':
    main()
```

The code above looks long, but its structure is quite clear. Let's test it on real data and see how well it works.

## BaseLine version

First, I trained directly with the most basic CNN structure, using the example given on the official website.
The code is as follows:

```python
def convolutional_neural_network_org(img):
    # First convolution + pooling layer
    conv_pool_1 = paddle.networks.simple_img_conv_pool(
        input=img,
        filter_size=5,
        num_filters=20,
        num_channel=1,
        pool_size=2,
        pool_stride=2,
        act=paddle.activation.Relu())
    # Second convolution + pooling layer
    conv_pool_2 = paddle.networks.simple_img_conv_pool(
        input=conv_pool_1,
        filter_size=5,
        num_filters=50,
        num_channel=20,
        pool_size=2,
        pool_stride=2,
        act=paddle.activation.Relu())
    # Fully connected layer
    predict = paddle.layer.fc(
        input=conv_pool_2, size=10, act=paddle.activation.Softmax())
    return predict
```

The output is as follows:

```
I1023 13:45:46.519075 34144 Util.cpp:166] commandline: --use_gpu=True --trainer_count=1
[INFO 2017-10-23 13:45:52,667 layers.py:2539] output for __conv_pool_0___conv: c = 20, h = 24, w = 24, size = 11520
[INFO 2017-10-23 13:45:52,667 layers.py:2667] output for __conv_pool_0___pool: c = 20, h = 12, w = 12, size = 2880
[INFO 2017-10-23 13:45:52,668 layers.py:2539] output for __conv_pool_1___conv: c = 50, h = 8, w = 8, size = 3200
[INFO 2017-10-23 13:45:52,669 layers.py:2667] output for __conv_pool_1___pool: c = 50, h = 4, w = 4, size = 800
I1023 13:45:52.675750 34144 GradientMachine.cpp:85] Initing parameters..
I1023 13:45:52.686153 34144 GradientMachine.cpp:92] Init parameters done.
Pass 0, Batch 0, Cost 3.048408, {'classification_error_evaluator': 0.890625}
Pass 0, Batch 100, Cost 0.188828, {'classification_error_evaluator': 0.0546875}
Pass 0, Batch 200, Cost 0.075183, {'classification_error_evaluator': 0.015625}
Pass 0, Batch 300, Cost 0.070798, {'classification_error_evaluator': 0.015625}
Pass 0, Batch 400, Cost 0.079673, {'classification_error_evaluator': 0.046875}
Test with Pass 0, Cost 0.074587, {'classification_error_evaluator': 0.023800000548362732}
...
Pass 4, Batch 0, Cost 0.032454, {'classification_error_evaluator': 0.015625}
Pass 4, Batch 100, Cost 0.021028, {'classification_error_evaluator': 0.0078125}
Pass 4, Batch 200, Cost 0.020458, {'classification_error_evaluator': 0.0}
Pass 4, Batch 300, Cost 0.046728, {'classification_error_evaluator': 0.015625}
Pass 4, Batch 400, Cost 0.030264, {'classification_error_evaluator': 0.015625}
Test with Pass 4, Cost 0.035841, {'classification_error_evaluator': 0.01209999993443489}
Best pass is 4, testing Avgcost is 0.0358410408473
The classification accuracy is 98.79%
Label of image/infer_3.png is: 3

real    0m31.565s
user    0m20.996s
sys     0m15.891s
```

As you can see, the first line of output shows the selected device. I chose the GPU here (WITH_GPU set to 1, shown as `--use_gpu=True`); for the CPU the setting would be 0. The next four lines describe the network structure, and then the training results follow. When training finishes, we print the best result among these passes: 98.79% accuracy. That is quite good, given that training ran for only 5 passes. Finally, look at the running time: very fast, about 31 seconds.

However, I am not fully satisfied with this result, because the network I tuned with Keras reaches 99.72% accuracy, although it is very slow: about 30 minutes for 69 iterations. So I felt this network structure could still be improved, and I made some changes to it.
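The layer sizes reported in the baseline log can be checked by hand. The sketch below assumes a 5x5 convolution with stride 1 and no padding, followed by 2x2 pooling with stride 2. That assumption is consistent with the logged shapes but is my reading of the log, not something the framework output states, and the helper names (`conv_out`, `pool_out`, `trace_shapes`) are hypothetical.

```python
def conv_out(size, filter_size, stride=1, padding=0):
    """Output side length of a square convolution (assumed stride 1, no padding)."""
    return (size + 2 * padding - filter_size) // stride + 1


def pool_out(size, pool_size, pool_stride):
    """Output side length of a square pooling window without padding."""
    return (size - pool_size) // pool_stride + 1


def trace_shapes(input_side=28, layers=((20, 5), (50, 5)),
                 pool_size=2, pool_stride=2):
    """Return (name, channels, side, size) for each conv and pool stage.

    `layers` holds (num_filters, filter_size) pairs taken from the article's
    network definition; `size` is channels * height * width.
    """
    shapes = []
    side = input_side
    for index, (num_filters, filter_size) in enumerate(layers):
        side = conv_out(side, filter_size)
        shapes.append(("conv_%d" % index, num_filters, side,
                       num_filters * side * side))
        side = pool_out(side, pool_size, pool_stride)
        shapes.append(("pool_%d" % index, num_filters, side,
                       num_filters * side * side))
    return shapes


if __name__ == "__main__":
    for name, channels, side, size in trace_shapes():
        print("%s: c = %d, h = %d, w = %d, size = %d"
              % (name, channels, side, side, size))
```

Under these assumptions the four computed sizes match the `size` values in the four network-structure log lines, which is a quick sanity check that the network is wired the way the code suggests.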
## Improved version

```python
def convolutional_neural_network(img):
    # First convolution + pooling layer
    conv_pool_1 = paddle.networks.simple_img_conv_pool(
        input=img,
        filter_size=5,
        num_filters=20,
        num_channel=1,
        pool_size=2,
        pool_stride=2,
        act=paddle.activation.Relu())
    # Add a dropout layer
    drop_1 = paddle.layer.dropout(input=conv_pool_1, dropout_rate=0.2)
    # Second convolution + pooling layer
    conv_pool_2 = paddle.networks.simple_img_conv_pool(
        input=drop_1,
        filter_size=5,
        num_filters=50,
        num_channel=20,
        pool_size=2,
        pool_stride=2,
        act=paddle.activation.Relu())
    # Add a dropout layer
    drop_2 = paddle.layer.dropout(input=conv_pool_2, dropout_rate=0.5)
    # Fully connected layers with batch normalization in between
    fc1 = paddle.layer.fc(input=drop_2, size=10, act=paddle.activation.Linear())
    bn = paddle.layer.batch_norm(
        input=fc1,
        act=paddle.activation.Relu(),
        layer_attr=paddle.attr.Extra(drop_rate=0.2))
    predict = paddle.layer.fc(input=bn, size=10, act=paddle.activation.Softmax())
    return predict
```

In the improved version we added some dropout layers to avoid overfitting. A dropout layer follows each of the two convolution layers, with dropout rates of 0.2 and 0.5 respectively in the code above. Changing the network structure is also very simple: just modify the model inside the function that defines the network. This is quite similar to how a network is defined in Keras, and it is very easy to use. Let's take a look at the results:

```
I1023 14:01:51.653827 34244 Util.cpp:166] commandline: --use_gpu=True --trainer_count=1
[INFO 2017-10-23 14:01:57,830 layers.py:2539] output for __conv_pool_0___conv: c = 20, h = 24, w = 24, size = 11520
[INFO 2017-10-23 14:01:57,831 layers.py:2667] output for __conv_pool_0___pool: c = 20, h = 12, w = 12, size = 2880
[INFO 2017-10-23 14:01:57,832 layers.py:2539] output for __conv_pool_1___conv: c = 50, h = 8, w = 8, size = 3200
[INFO 2017-10-23 14:01:57,833 layers.py:2667] output for __conv_pool_1___pool: c = 50, h = 4, w = 4, size = 800
I1023 14:01:57.842871 34244 GradientMachine.cpp:85] Initing parameters..
I1023 14:01:57.854014 34244 GradientMachine.cpp:92] Init parameters done.
Pass 0, Batch 0, Cost 2.536199, {'classification_error_evaluator': 0.875}
Pass 0, Batch 100, Cost 1.668236, {'classification_error_evaluator': 0.515625}
Pass 0, Batch 200, Cost 1.024846, {'classification_error_evaluator': 0.375}
Pass 0, Batch 300, Cost 1.086315, {'classification_error_evaluator': 0.46875}
Pass 0, Batch 400, Cost 0.767804, {'classification_error_evaluator': 0.25}
Pass 0, Batch 500, Cost 0.545784, {'classification_error_evaluator': 0.1875}
Pass 0, Batch 600, Cost 0.731662, {'classification_error_evaluator': 0.328125}
...
Pass 49, Batch 0, Cost 0.415184, {'classification_error_evaluator': 0.09375}
Pass 49, Batch 100, Cost 0.067616, {'classification_error_evaluator': 0.0}
Pass 49, Batch 200, Cost 0.161415, {'classification_error_evaluator': 0.046875}
Pass 49, Batch 300, Cost 0.202667, {'classification_error_evaluator': 0.046875}
Pass 49, Batch 400, Cost 0.336043, {'classification_error_evaluator': 0.140625}
Pass 49, Batch 500, Cost 0.290948, {'classification_error_evaluator': 0.125}
Pass 49, Batch 600, Cost 0.223433, {'classification_error_evaluator': 0.109375}
Pass 49, Batch 700, Cost 0.217345, {'classification_error_evaluator': 0.0625}
Pass 49, Batch 800, Cost 0.163140, {'classification_error_evaluator': 0.046875}
Pass 49, Batch 900, Cost 0.203645, {'classification_error_evaluator': 0.078125}
Test with Pass 49, Cost 0.033639, {'classification_error_evaluator': 0.008100000210106373}
Best pass is 48, testing Avgcost is 0.0313018567383
The classification accuracy is 99.28%
Label of image/infer_3.png is: 3

real    5m3.151s
user    4m0.052s
sys     1m8.084s
```

From the data above, the result is quite good. Compared with my earlier Keras training (99.72% accuracy, about 30 minutes for 69 iterations), this PaddlePaddle run reached 99.28% in about 5 minutes.

The speed difference is very large. With similar accuracy, training takes roughly one sixth of the time, and the network structure is relatively simple, which means there are far fewer parameters to tune.

## Summary

PaddlePaddle is very convenient to use, and both the way network structures are defined and the training speed deserve praise. From my personal experience, the points most worth mentioning are:

1. Importing data is convenient. The handwritten digit dataset used for this training is relatively small, but if you want to add data it is easy: it can be added directly to the corresponding directory.

2. The event_handler mechanism lets you customize the output of training results. With Keras and MXNet, which I used before, the output comes from encapsulated functions and always looks the same. PaddlePaddle does not fully encapsulate this function and instead lets users customize what is printed. We can cut redundant information, add details about the training process, and use the corresponding functions to plot the model's convergence curve.

3. It is fast. The example above demonstrates PaddlePaddle's speed, and while training faster, the model's accuracy stays close to the best result, which is a great advantage when training models on massive data.

However, some things about PaddlePaddle bother me. For example, there is too little documentation: when I ran into errors and searched online, I found nothing. I don't think this is a big problem, though. As more people use it, more material will appear. So I have long been puzzled: why hasn't PaddlePaddle caught on?
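As a small illustration of the event_handler point above: because the handler prints lines in a regular format, the costs can be collected afterwards for a convergence curve. This is a minimal sketch that assumes the "Pass %d, Batch %d, Cost %f" line format shown in the training output. `parse_costs` and `mean_cost_per_pass` are hypothetical helper names, not part of PaddlePaddle, and plotting is left out.

```python
import re

# Matches lines such as "Pass 0, Batch 100, Cost 0.188828, {...}".
# Lines starting with "Test with Pass" are deliberately not matched.
LINE_PATTERN = re.compile(r"^Pass (\d+), Batch (\d+), Cost ([0-9.]+)")


def parse_costs(log_text):
    """Return a list of (pass_id, batch_id, cost) tuples from training log text."""
    records = []
    for line in log_text.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:
            records.append((int(match.group(1)),
                            int(match.group(2)),
                            float(match.group(3))))
    return records


def mean_cost_per_pass(records):
    """Average the logged batch costs within each pass, keyed by pass id."""
    totals = {}
    for pass_id, _batch_id, cost in records:
        total, count = totals.get(pass_id, (0.0, 0))
        totals[pass_id] = (total + cost, count + 1)
    return {pass_id: total / count
            for pass_id, (total, count) in totals.items()}


# A few lines copied from the improved-version output in this article.
SAMPLE_LOG = """\
Pass 0, Batch 0, Cost 2.536199, {'classification_error_evaluator': 0.875}
Pass 0, Batch 100, Cost 1.668236, {'classification_error_evaluator': 0.515625}
Pass 49, Batch 0, Cost 0.415184, {'classification_error_evaluator': 0.09375}
Test with Pass 49, Cost 0.033639, {'classification_error_evaluator': 0.008100000210106373}
"""

if __name__ == "__main__":
    for record in parse_costs(SAMPLE_LOG):
        print(record)
```

The resulting tuples can be passed to any plotting library. Inside a real event_handler, the same values could be appended to a list directly instead of being parsed back out of the log.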
Installation is admittedly a sore point, but PaddlePaddle is still an excellent piece of open source software. What deserves the most praise is its distributed training: the multi-machine, multi-card design is very good. This article did not cover it. Next time I will discuss how to train models with PaddlePaddle in single-machine single-card, single-machine multi-card, multi-machine single-card, and multi-machine multi-card setups. If you use it a lot as well, let's exchange notes.

P.S. Because there is so little PaddlePaddle documentation, the articles on the official website lean toward theory, and most blog posts online cover only a few classic examples, I plan to write a hands-on series that goes beyond deep learning "hello world" programs. This "hello world" serves as a primer, and the next part will get into more substantial material.
