10 deep learning methods that artificial intelligence practitioners have to know

Artificial intelligence is sweeping across the globe, and its influence is expanding rapidly. Terms like deep learning and machine learning are now part of everyday conversation. Many entrepreneurs are eager to enter the AI industry, and deep learning is one of the most important areas for them to master.

Recently, software engineer James Le published an article titled "The 10 Deep Learning Methods AI Practitioners Need to Apply" on Medium. In it, he highlights ten essential techniques used in convolutional neural networks, recurrent neural networks, and other key architectures, and gives an overview of the core concepts and practical applications of each.

Over the past decade, interest in machine learning has remained strong. It's common to encounter discussions about it in computer science courses, industry conferences, and even mainstream media like the Wall Street Journal. However, many people still confuse what machine learning can actually do with what they hope it can do. Fundamentally, machine learning uses algorithms to extract patterns from raw data and build models that can make predictions or decisions about new inputs.

Neural networks, one family of machine learning models, have been around for over 50 years. They consist of nodes that apply nonlinear transformations, loosely inspired by biological neurons in the mammalian brain. The strengths (weights) of the connections between these nodes are adjusted during training, which is how the network learns from data and improves over time.

In the mid-1980s and early 1990s, significant progress was made in neural network architectures. However, the heavy computational demands and the limited data available at the time hindered their widespread adoption, and interest declined. In the early 2000s, computing power began to grow exponentially, and the field saw a "Cambrian explosion" of new computational techniques. Deep learning emerged from this period as a powerful force, achieving remarkable results in many machine learning competitions. By 2017, it had become a central component of almost every machine learning application.

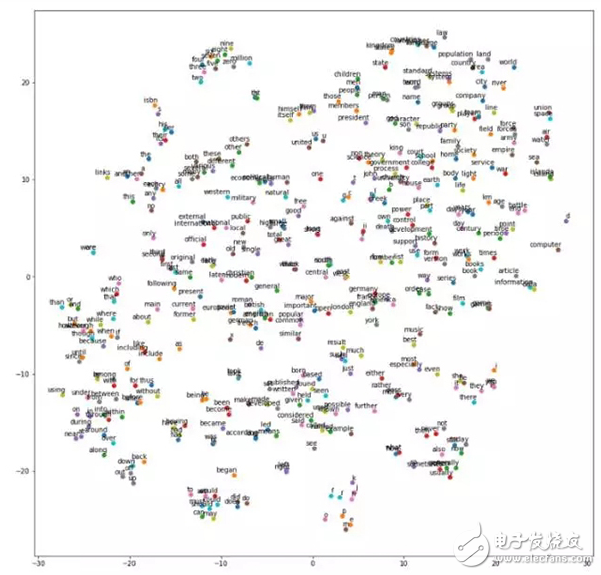

The image above shows a t-SNE projection of word vectors clustered by similarity.

Since I started reading academic papers on the subject, I've come across several influential works that shaped the field, including:

- "Gradient-Based Learning Applied to Document Recognition" (1998) by New York University: [Link](http://yann.lecun.com/exdb/publis/pdf/lecun-01a.pdf)

- "Deep Boltzmann Machines" (2009) by the University of Toronto: [Link](http://proceedings.mlr.press/v5/salakhutdinov09a/)

- "Building High-Level Features Using Large-Scale Unsupervised Learning" (2012) by Stanford and Google: [Link](http://icml.cc/2012/papers/73.pdf)

- "DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition" (2013) by Berkeley: [Link](http://proceedings.mlr.press/v32/donahue14.pdf)

- "Playing Atari with Deep Reinforcement Learning" (2013) by DeepMind: [Link](https://vmnih.github.io/dqn.pdf)

After researching and studying these topics, I want to share 10 powerful deep learning methods that engineers can use to solve real-world problems. Before we dive in, let’s define what deep learning really means.

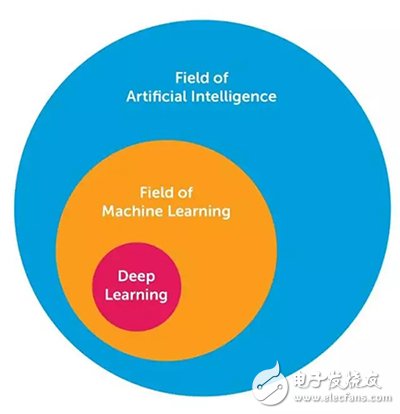

Deep learning is a subfield of machine learning that involves neural networks with multiple layers. These networks are designed to automatically extract features from raw data, making them highly effective for complex tasks such as image recognition, natural language processing, and more.

The following diagram illustrates the relationship between artificial intelligence, machine learning, and deep learning:

Deep learning differs from traditional neural networks in several ways:

- More neurons

- More complex connections between layers

- Much more computing power available for training

- Automatic feature extraction

Now, let's explore the top 10 deep learning methods that practitioners should know.

**1. Backpropagation**

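Backpropagation is the standard method for computing gradients in neural networks. After a forward pass computes the loss, it applies the chain rule layer by layer, propagating error signals from the output back toward the input, so the gradient of the loss with respect to every weight is obtained in a single backward pass. An optimizer such as gradient descent then uses these gradients to update the parameters. This technique is fundamental to training deep neural networks.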
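
To make the chain-rule bookkeeping concrete, here is a minimal pure-Python sketch. The network shape (one input, three tanh hidden units, one linear output), the squared-error loss, and all function names are illustrative assumptions, not taken from the original article. It backpropagates through the network and checks the first-layer gradients against a finite-difference estimate.

```python
import math
import random

def forward(w1, b1, w2, b2, x):
    """Tiny network: scalar input -> H tanh hidden units -> scalar linear output."""
    z = [w1[j] * x + b1[j] for j in range(len(w1))]        # hidden pre-activations
    h = [math.tanh(zj) for zj in z]                          # hidden activations
    y = sum(w2[j] * h[j] for j in range(len(h))) + b2        # network output
    return z, h, y

def loss(params, x, t):
    """Squared-error loss 0.5 * (y - t)^2 for a single example."""
    _, _, y = forward(*params, x)
    return 0.5 * (y - t) ** 2

def backprop(params, x, t):
    """Gradients of the loss w.r.t. every parameter, computed output-to-input with the chain rule."""
    w1, b1, w2, b2 = params
    z, h, y = forward(w1, b1, w2, b2, x)
    dy = y - t                                              # dL/dy
    dw2 = [dy * hj for hj in h]                             # dL/dw2_j = dL/dy * h_j
    db2 = dy                                                # dL/db2
    dh = [dy * w2j for w2j in w2]                           # error passed back to hidden units
    dz = [dh[j] * (1.0 - math.tanh(z[j]) ** 2) for j in range(len(z))]  # tanh'(z) = 1 - tanh(z)^2
    dw1 = [dz[j] * x for j in range(len(z))]                # dL/dw1_j
    db1 = list(dz)                                          # dL/db1_j
    return dw1, db1, dw2, db2

def numerical_grad_w1(params, x, t, j, eps=1e-6):
    """Central finite difference for w1[j], used only to check backprop."""
    w1, b1, w2, b2 = params
    plus, minus = list(w1), list(w1)
    plus[j] += eps
    minus[j] -= eps
    return (loss((plus, b1, w2, b2), x, t) - loss((minus, b1, w2, b2), x, t)) / (2 * eps)

random.seed(0)
H = 3
params = ([random.uniform(-1, 1) for _ in range(H)],
          [random.uniform(-1, 1) for _ in range(H)],
          [random.uniform(-1, 1) for _ in range(H)],
          random.uniform(-1, 1))
x, t = 0.5, 0.2
dw1, db1, dw2, db2 = backprop(params, x, t)
for j in range(H):
    print(f"w1[{j}]: backprop={dw1[j]:.8f}  finite-diff={numerical_grad_w1(params, x, t, j):.8f}")
```

If the two columns agree to several decimal places, the backward pass is correct. Frameworks such as PyTorch and TensorFlow automate this bookkeeping through automatic differentiation.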

**2. Stochastic Gradient Descent**

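Stochastic gradient descent (SGD) is an optimization algorithm used to minimize the loss function in machine learning. Instead of computing the gradient over the entire dataset, it estimates the gradient from a single randomly chosen example (or, in practice, a small mini-batch) and immediately updates the parameters by subtracting that gradient scaled by the learning rate. Each update is cheap but noisy, and many such updates move the parameters toward a minimum.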
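
A minimal sketch of SGD, assuming a toy problem (fitting a line to noise-free points generated from y = 2x + 1) and hand-picked hyperparameters; each parameter update uses the gradient from one example only. The function name is illustrative.

```python
import random

def sgd_linear_regression(data, lr=0.05, epochs=50, seed=0):
    """Fit y ≈ w*x + b by stochastic gradient descent on squared error, one example per update."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)                  # visit the examples in a new random order each epoch
        for x, y in data:
            err = (w * x + b) - y          # prediction error on this single example
            w -= lr * err * x              # d(0.5*err^2)/dw = err * x
            b -= lr * err                  # d(0.5*err^2)/db = err
    return w, b

# Noise-free toy data from y = 2x + 1 (assumed purely for illustration).
points = [(i / 10, 2 * (i / 10) + 1) for i in range(-10, 11)]
w, b = sgd_linear_regression(points)
print(round(w, 3), round(b, 3))    # w and b approach 2 and 1
```

Averaging the gradient over a small mini-batch instead of a single example is a common middle ground: it reduces the noise of each step while keeping updates cheap.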

**3. Learning Rate Decay**

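Adjusting the learning rate during training helps stabilize learning and improve convergence: large steps early on make fast progress, while smaller steps later let the parameters settle near a minimum instead of bouncing around it. Common strategies include step decay (cutting the rate by a fixed factor every few epochs), exponential decay, and gradually reducing the rate as training proceeds.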
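
Two common schedules are sketched below as plain functions of the epoch number. The factor `drop`, the interval `epochs_per_drop`, and the rate `k` are illustrative defaults, not recommended values.

```python
import math

def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the learning rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)

def exponential_decay(initial_lr, epoch, k=0.1):
    """Shrink the learning rate smoothly: lr = initial_lr * exp(-k * epoch)."""
    return initial_lr * math.exp(-k * epoch)

for epoch in (0, 9, 10, 25):
    print(epoch, step_decay(0.1, epoch), round(exponential_decay(0.1, epoch), 5))
```

In a training loop, the rate for the current epoch is simply recomputed before that epoch's parameter updates.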

**4. Dropout**

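Dropout is a regularization technique that randomly deactivates neurons during training to prevent overfitting: on each training step, every unit is dropped (its output set to zero) with some probability p. This stops neurons from co-adapting and forces the network to learn more robust features that do not depend on any particular subset of neurons. At test time all neurons are kept, and activations are scaled so their expected values match those seen during training.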
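
A minimal sketch of the common "inverted dropout" variant, which scales the surviving activations during training so that nothing needs to change at test time. The function name and signature are illustrative assumptions.

```python
import random

def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: during training, zero each activation with probability p and
    scale the survivors by 1/(1-p) so the expected value is unchanged.
    At inference time (training=False) the activations pass through untouched."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(42)
hidden = [1.0] * 10
print(dropout(hidden, p=0.5, rng=rng))         # each entry is either 0.0 or 2.0
print(dropout(hidden, p=0.5, training=False))  # unchanged at test time
```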

**5. Max Pooling**

Max pooling is a downsampling technique that reduces the spatial dimensions of feature maps. It slides a window over the map (commonly 2×2 with a stride of 2, which halves the height and width) and keeps only the maximum value in each window, retaining the strongest responses while reducing the computational load.
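
A minimal pure-Python sketch of 2×2 max pooling with stride 2 on a single-channel feature map; the function name and the example values are made up for illustration.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Max pooling over a 2-D list (H x W); windows that would run past the edge are dropped."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            # Collect the size x size window whose top-left corner is (i, j).
            window = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out

fm = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]
print(max_pool2d(fm))  # [[6, 5], [7, 9]]
```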

**6. Batch Normalization**

Batch normalization normalizes the inputs to a layer using the mean and variance computed over the current mini-batch, then applies a learnable scale and shift. It helps accelerate training, reduces sensitivity to the initial weights, and often improves overall performance.
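
A minimal sketch of the training-time forward pass, assuming the batch is a list of samples with one list of features each. Real implementations also track running averages of the mean and variance for use at inference time; this sketch covers only the training-mode computation, and the names are illustrative.

```python
import math

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization.
    batch: N samples, each a list of D features. Every feature is normalized with the
    mean and variance computed over the N samples, then scaled by gamma[k] and shifted
    by beta[k] (learnable parameters in a real network)."""
    n, d = len(batch), len(batch[0])
    means = [sum(x[k] for x in batch) / n for k in range(d)]
    variances = [sum((x[k] - means[k]) ** 2 for x in batch) / n for k in range(d)]
    return [[gamma[k] * (x[k] - means[k]) / math.sqrt(variances[k] + eps) + beta[k]
             for k in range(d)]
            for x in batch]

# Two features on very different scales (made-up values).
batch = [[1.0, 100.0], [2.0, 200.0], [3.0, 300.0], [4.0, 400.0]]
out = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
for row in out:
    print([round(v, 3) for v in row])
```

After normalization both features have roughly zero mean and unit variance, so the next layer sees inputs on a comparable scale regardless of their original units.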

**7. Long Short-Term Memory (LSTM)**

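LSTMs are a type of recurrent neural network that can learn long-term dependencies. Alongside the hidden state, each LSTM cell keeps a cell state, and three gates (forget, input, and output) control what is erased from, written to, and read from that memory at every time step. This makes LSTMs well suited to sequence modeling tasks such as speech recognition and machine translation.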
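
The sketch below implements one time step of an LSTM cell, simplified to a single unit with scalar input and state so the gate arithmetic is easy to follow; real LSTMs use weight matrices and vector states. The parameter names and the hand-picked values in the usage example are illustrative assumptions.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM (scalar input, hidden state and cell state).
    p maps illustrative names to weights: W* (input), U* (recurrent), b* (bias)
    for the forget (f), input (i) and output (o) gates and the candidate (g)."""
    f = sigmoid(p["Wf"] * x + p["Uf"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["Wi"] * x + p["Ui"] * h_prev + p["bi"])    # input gate: how much new content to write
    o = sigmoid(p["Wo"] * x + p["Uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    g = math.tanh(p["Wg"] * x + p["Ug"] * h_prev + p["bg"])  # candidate content
    c = f * c_prev + i * g                                   # additive memory update
    h = o * math.tanh(c)
    return h, c

def run_lstm(xs, p):
    """Run the cell over a sequence, starting from zero state; return the final (h, c)."""
    h, c = 0.0, 0.0
    for x in xs:
        h, c = lstm_step(x, h, c, p)
    return h, c

# Hand-picked weights (assumed for illustration): write when x = 1, always expose memory.
remember = {"Wf": 0.0, "Uf": 0.0, "bf": 10.0,    # forget gate ~1: keep the memory
            "Wi": 10.0, "Ui": 0.0, "bi": -5.0,   # input gate opens only for large x
            "Wo": 0.0, "Uo": 0.0, "bo": 10.0,
            "Wg": 3.0, "Ug": 0.0, "bg": 0.0}
forget = dict(remember, bf=-10.0)                # same cell, but forget gate ~0

sequence = [1.0] + [0.0] * 50                    # one signal, then 50 empty steps
print(run_lstm(sequence, remember))
print(run_lstm(sequence, forget))
```

With a strongly positive forget-gate bias, the value written at the first step survives 50 steps of zero input; with a strongly negative one, it is erased almost immediately.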

**8. Skip-Gram**

Skip-gram is a model for learning word embeddings that predicts the surrounding (context) words given a target word. Words that appear in similar contexts end up with similar vectors, so the embeddings capture semantic relationships between words and are useful for tasks like text classification and sentiment analysis.
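
The sketch below shows two ingredients of skip-gram: how (target, context) training pairs are extracted from a sentence, and the probability p(context | target) that training pushes up, computed here with a plain softmax over a tiny vocabulary. The embeddings are random placeholders and no training loop is included; practical implementations such as word2vec also replace the full softmax with cheaper approximations like negative sampling. Function names are illustrative.

```python
import math
import random

def skipgram_pairs(tokens, window=2):
    """Return (target, context) pairs: each word predicts its neighbours within `window`."""
    pairs = []
    for i, target in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def context_prob(target, context, in_vecs, out_vecs):
    """p(context | target): softmax over the vocabulary of dot(out_vecs[w], in_vecs[target])."""
    v = in_vecs[target]
    scores = {w: sum(a * b for a, b in zip(u, v)) for w, u in out_vecs.items()}
    m = max(scores.values())                       # subtract the max for numerical stability
    z = sum(math.exp(s - m) for s in scores.values())
    return math.exp(scores[context] - m) / z

tokens = "the quick brown fox jumps".split()
pairs = skipgram_pairs(tokens, window=1)
print(pairs)

# Random placeholder embeddings; training would adjust them to raise the
# probability of every observed (target, context) pair.
rng = random.Random(0)
vocab = sorted(set(tokens))
in_vecs = {w: [rng.uniform(-0.5, 0.5) for _ in range(4)] for w in vocab}
out_vecs = {w: [rng.uniform(-0.5, 0.5) for _ in range(4)] for w in vocab}
print(context_prob("fox", "jumps", in_vecs, out_vecs))
```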

**9. Continuous Bag-of-Words (CBOW)**

CBOW is another word embedding model; it works in the opposite direction from skip-gram, predicting a target word from its context. It averages the embeddings of the surrounding words and uses the resulting vector to predict the word in the middle.
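
A minimal CBOW sketch, assuming a toy sentence, random placeholder embeddings, a plain softmax over the vocabulary, and no training loop: each example pairs a list of context words with the centre word, and the prediction starts from the average of the context embeddings. Function names are illustrative.

```python
import math
import random

def cbow_examples(tokens, window=2):
    """Return (context_words, target) examples: the neighbours within `window` predict the centre word."""
    examples = []
    for i, target in enumerate(tokens):
        context = [tokens[j]
                   for j in range(max(0, i - window), min(len(tokens), i + window + 1))
                   if j != i]
        examples.append((context, target))
    return examples

def target_probs(context, in_vecs, out_vecs):
    """Average the context embeddings into h, then softmax over dot(out_vecs[w], h) for each word w."""
    dim = len(next(iter(in_vecs.values())))
    h = [sum(in_vecs[w][k] for w in context) / len(context) for k in range(dim)]
    scores = {w: sum(a * b for a, b in zip(u, h)) for w, u in out_vecs.items()}
    m = max(scores.values())                       # subtract the max for numerical stability
    z = sum(math.exp(s - m) for s in scores.values())
    return {w: math.exp(s - m) / z for w, s in scores.items()}

tokens = "the quick brown fox jumps".split()
examples = cbow_examples(tokens, window=1)
print(examples)

# Random placeholder embeddings; training would adjust them so the true centre
# word gets the highest probability.
rng = random.Random(0)
vocab = sorted(set(tokens))
in_vecs = {w: [rng.uniform(-0.5, 0.5) for _ in range(4)] for w in vocab}
out_vecs = {w: [rng.uniform(-0.5, 0.5) for _ in range(4)] for w in vocab}
probs = target_probs(["quick", "fox"], in_vecs, out_vecs)
print(probs["brown"])
```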

**10. Transfer Learning**

Transfer learning takes a model pre-trained on a large dataset and fine-tunes it for a specific task, often by retraining only the final layers. Because the pre-trained layers already encode general-purpose features, this approach significantly reduces training time and data requirements, making it highly efficient for real-world applications.
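
A minimal sketch of the "frozen feature extractor" flavor of transfer learning. The `PRETRAINED_W`/`PRETRAINED_B` layer is a hypothetical stand-in with hand-picked weights (in practice you would load a network trained on a large dataset); only a new logistic-regression head is trained, on a small made-up task where the label is 1 when x[0] > x[1].

```python
import math
import random

# Hypothetical "pre-trained" layer with hand-picked weights; in practice these would be
# loaded from a network trained on a large dataset.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]   # 3 features from 2 inputs
PRETRAINED_B = [0.0, -0.2, 0.1]

def frozen_features(x):
    """Apply the pre-trained layer with ReLU; these weights are never updated below."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(PRETRAINED_W, PRETRAINED_B)]

def train_head(data, lr=0.1, epochs=200, seed=0):
    """Train only a new logistic-regression head on top of the frozen features (SGD on log-loss)."""
    rng = random.Random(seed)
    w, b = [0.0] * len(PRETRAINED_W), 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            f = frozen_features(x)
            logit = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-logit))
            err = p - y                                  # d(log-loss)/d(logit)
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(x, w, b):
    f = frozen_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0

# Small toy target task (made up): label 1 when x[0] > x[1].
data = [((1.0, 0.0), 1), ((2.0, 0.5), 1), ((0.5, -0.5), 1),
        ((0.0, 1.0), 0), ((0.5, 2.0), 0), ((-0.5, 0.5), 0)]
w, b = train_head(data)
print([predict(x, w, b) for x, _ in data])
```

Full fine-tuning goes one step further and also updates some or all of the pre-trained weights, usually with a small learning rate.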

Deep learning is a rapidly evolving field, and while many techniques lack formal theoretical foundations, their effectiveness is often validated through experimentation. Understanding these methods from the ground up will help you apply them more effectively in your own projects.
