10 deep learning methods that artificial intelligence practitioners have to know

The rise of artificial intelligence is reshaping industries worldwide, with deep learning and machine learning becoming central to technological advancement. Entrepreneurs and researchers are increasingly drawn to the AI sector, recognizing deep learning as a crucial domain for innovation. Recently, software engineer James Le shared an insightful article titled *"The 10 Deep Learning Methods AI Practitioners Need to Apply"* on Medium. In this piece, he outlines ten essential techniques, covering convolutional neural networks (CNNs), recurrent neural networks (RNNs), and more. The article is a valuable resource for anyone looking to understand and apply deep learning in real-world scenarios.

Over the past decade, interest in machine learning has remained strong. It's now a common topic in computer science courses, industry conferences, and even mainstream media like the Wall Street Journal. However, there's often confusion about what machine learning can actually do versus what people hope it will achieve. At its core, machine learning uses algorithms to extract patterns from raw data, building models that can make predictions or decisions on new, unseen data.

Neural networks, a key type of machine learning model, have been around for over 50 years. They are inspired by the structure of biological neurons in the mammalian brain, with connections between nodes that evolve through training. While early neural networks showed promise, their high computational demands limited practical applications until the 2000s, when computing power exploded. This "Cambrian explosion" in technology allowed deep learning to thrive, leading to breakthroughs in various machine learning competitions.

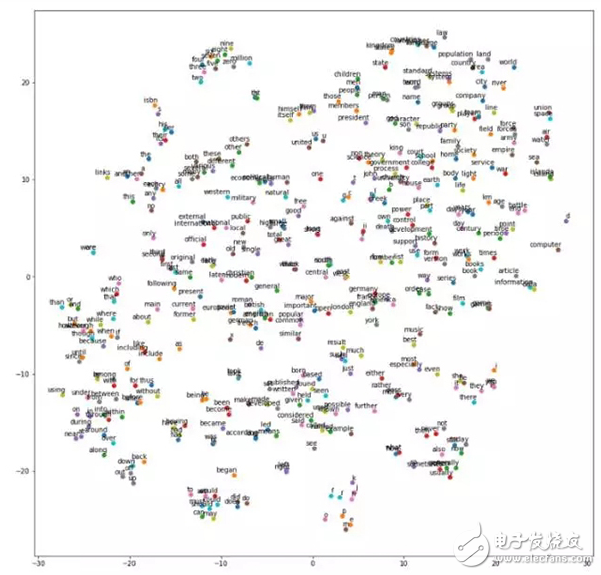

By 2017, deep learning had reached a peak in popularity, with applications spanning image recognition, natural language processing, and more. Below is a t-SNE projection showing word vectors clustered by similarity:

As I've been diving into academic research, I've come across several influential papers that shaped the field. For example, *Gradient-Based Learning Applied to Document Recognition* (1998), by Yann LeCun and colleagues, applied convolutional neural networks to document recognition and helped establish them as a practical architecture. Another key work, *Deep Boltzmann Machines* (2009) from the University of Toronto, proposed a learning algorithm for Boltzmann machines with many hidden layers. Stanford and Google's *Building High-Level Features Using Large-Scale Unsupervised Learning* (2012) tackled the challenge of learning high-level features from unlabeled data.

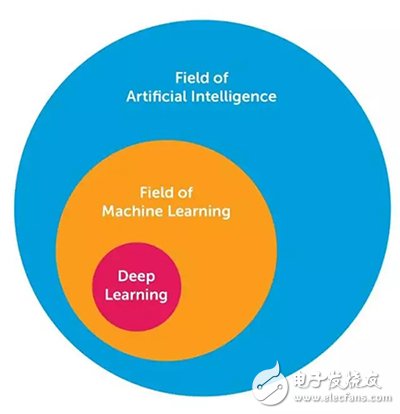

To better understand deep learning, I’ve also studied the relationship between artificial intelligence, machine learning, and deep learning. Here’s a visual representation:

Artificial intelligence is the broadest field, encompassing machine learning, which in turn includes deep learning. What sets deep learning apart from traditional neural networks are factors like more neurons, complex layer connections, and automatic feature extraction.

Now, let’s dive into the top 10 deep learning methods every practitioner should know:

**1. Backpropagation**

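Backpropagation is a method for calculating the gradient of the loss with respect to every weight in a neural network. It applies the chain rule layer by layer, from the output back to the input. Gradient descent then uses those gradients to update the weights, which makes backpropagation a fundamental technique for training deep models.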
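To make the chain rule concrete, here is a minimal sketch on a hypothetical two-weight network (one tanh hidden unit, a linear output, and a squared-error loss). The network shape and all names are assumptions chosen for illustration. The hand-derived gradients are compared against finite differences as a sanity check.

```python
import math

def forward(w1, w2, x):
    """Tiny network: one tanh hidden unit followed by a linear output."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y

def loss(w1, w2, x, t):
    """Squared-error loss against target t."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - t) ** 2

def backprop(w1, w2, x, t):
    """Return (dL/dw1, dL/dw2) via the chain rule, output to input."""
    h, y = forward(w1, w2, x)
    dL_dy = y - t                    # derivative of 0.5 * (y - t)^2
    dL_dw2 = dL_dy * h               # because y = w2 * h
    dL_dh = dL_dy * w2               # pass the error back through the output weight
    dL_da = dL_dh * (1.0 - h * h)    # tanh'(a) = 1 - tanh(a)^2
    dL_dw1 = dL_da * x               # because a = w1 * x
    return dL_dw1, dL_dw2

def numerical_grad(w1, w2, x, t, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    g1 = (loss(w1 + eps, w2, x, t) - loss(w1 - eps, w2, x, t)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, t) - loss(w1, w2 - eps, x, t)) / (2 * eps)
    return g1, g2
```

In a real framework this bookkeeping is done automatically, but the per-layer pattern (local derivative times upstream gradient) is the same.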

**2. Stochastic Gradient Descent (SGD)**

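This optimization algorithm updates model parameters by stepping against the gradient of the loss function, estimating that gradient from a single example or a small mini-batch rather than the full dataset. Because each update is cheap, it can move toward a minimum of the loss function efficiently even on large datasets.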
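As a rough illustration, the sketch below fits a line with one-example-at-a-time SGD. The function name, the toy data (`y = 2x + 1`), and the hyperparameters are all assumptions made for the example.

```python
import random

def sgd_linear_fit(data, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w * x + b by updating on one shuffled example at a time."""
    rng = random.Random(seed)
    data = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)             # a fresh random order each epoch
        for x, y in data:
            err = (w * x + b) - y     # gradient of 0.5 * err^2 w.r.t. the prediction
            w -= lr * err * x         # step against dL/dw
            b -= lr * err             # step against dL/db
    return w, b

# Noise-free toy data drawn from y = 2x + 1 on [-1, 1].
toy_data = [(k / 10.0, 2.0 * (k / 10.0) + 1.0) for k in range(-10, 11)]
w, b = sgd_linear_fit(toy_data)
```

On this noise-free data the estimates should approach `w = 2` and `b = 1`. With noisy data, SGD would hover around the minimum instead of settling exactly on it.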

**3. Learning Rate Decay**

Adjusting the learning rate over time can improve model performance. Reducing the rate gradually helps fine-tune the model as training progresses.
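Two common ways to reduce the rate are sketched below: step decay and exponential decay. The function names and default constants are assumptions for illustration. Real schedules are usually tuned per problem.

```python
import math

def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * (drop ** (epoch // epochs_per_drop))

def exponential_decay(initial_lr, epoch, k=0.1):
    """Shrink the rate smoothly: lr = lr0 * exp(-k * epoch)."""
    return initial_lr * math.exp(-k * epoch)

# Learning rates at a few epochs under the step schedule.
schedule = [step_decay(0.1, epoch) for epoch in (0, 10, 25)]
```

Either function can be called at the start of each epoch to set the rate used by the optimizer.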

**4. Dropout**

Dropout is a regularization technique that randomly deactivates neurons during training, preventing overfitting and improving generalization.
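A minimal sketch of the idea follows, using the "inverted dropout" variant, which is an assumption here and not something the article specifies. Kept activations are scaled up during training so that nothing needs to change at inference time. The function name and parameters are hypothetical.

```python
import random

def dropout(activations, p_drop=0.5, training=True, rng=None):
    """Inverted dropout: zero each unit with probability p_drop and
    scale the survivors by 1 / (1 - p_drop) to keep the expected value."""
    if not training or p_drop == 0.0:
        return list(activations)      # inference: pass activations through unchanged
    rng = rng or random
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

# Roughly half of the units are zeroed; the rest are doubled.
sample = dropout([1.0] * 8, p_drop=0.5, rng=random.Random(0))
```

Because a different random subset of neurons is dropped on every pass, the network cannot rely on any single unit, which is where the regularizing effect comes from.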

**5. Max Pooling**

Max pooling slides a small window over the input and keeps only the largest value in each window. This reduces the spatial dimensions of the data, preserving dominant features while cutting computational load.
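The operation is simple enough to sketch in plain Python. The example below assumes a 2x2 window with stride 2 over a small made-up 4x4 input; the function name and data are illustrative only.

```python
def max_pool2d(image, size=2, stride=2):
    """Keep the maximum of each size x size window of a 2D list."""
    rows, cols = len(image), len(image[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            window = [image[r + i][c + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled

image = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 8, 3, 4],
]
pooled = max_pool2d(image)  # [[6, 4], [8, 9]]
```

The 4x4 input shrinks to 2x2, so later layers process a quarter of the values while the strongest response in each region survives.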

**6. Batch Normalization**

This technique normalizes the inputs of each layer using the mean and variance of the current mini-batch, then applies a learned scale and shift. It accelerates training and improves model stability.
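Here is a minimal sketch of the training-time computation, assuming a batch stored as a list of rows and a single shared scale (`gamma`) and shift (`beta`). In practice these are learned per feature, and running statistics are kept for inference. Both are omitted here for brevity, and all names are hypothetical.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature column to zero mean and unit variance
    over the batch, then scale by gamma and shift by beta."""
    n = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(n)]
    for j in range(n_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        var = sum((v - mean) ** 2 for v in column) / n   # biased batch variance
        inv_std = 1.0 / math.sqrt(var + eps)             # eps avoids division by zero
        for i in range(n):
            out[i][j] = gamma * (column[i] - mean) * inv_std + beta
    return out

normalized = batch_norm([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
```

Although the two input columns differ by a factor of ten, both come out on the same scale, which is what keeps the inputs to the next layer well-behaved.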

**7. Long Short-Term Memory (LSTM)**

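LSTMs are a type of RNN that use gates to control what is written to, kept in, and read from an internal cell state. This lets them remember long-term dependencies, making them well suited to sequence modeling tasks.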
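The gating mechanism behind this memory can be sketched with a single scalar LSTM cell. The version below follows the standard forget, input, output, and candidate formulation. The parameter layout (a dict of `(w_x, w_h, bias)` triples) and the example values are assumptions for illustration only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell.
    params maps each gate name to a (w_x, w_h, bias) triple."""
    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to write
    o = gate("output", sigmoid)        # how much of the cell state to expose
    g = gate("candidate", math.tanh)   # proposed new content

    c = f * c_prev + i * g             # updated cell state (the long-term memory)
    h = o * math.tanh(c)               # new hidden state
    return h, c

# Hypothetical parameters: forget gate nearly open, input gate nearly closed,
# so the cell state is carried forward almost unchanged.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = lstm_step(0.5, 0.0, 1.0, params)
```

With the forget gate close to 1, the cell state passes from step to step almost untouched, which is how information can persist across long sequences.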

**8. Skip-Gram**

A popular model for learning word embeddings, Skip-Gram predicts surrounding words given a target word, capturing semantic relationships.
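To show what "predicting surrounding words" means in terms of training data, here is a small sketch that generates (target, context) pairs from a token list. The function name and the window size are assumptions. The model that learns embeddings from these pairs is not shown.

```python
def skipgram_pairs(tokens, window=2):
    """Return (target, context) pairs for every word within `window`
    positions of each target word."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:                      # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs

pairs = skipgram_pairs(["the", "quick", "brown", "fox"], window=1)
# [('the', 'quick'), ('quick', 'the'), ('quick', 'brown'),
#  ('brown', 'quick'), ('brown', 'fox'), ('fox', 'brown')]
```

Each pair becomes one training example: given the first word, the model is asked to assign high probability to the second.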

**9. Continuous Bag-of-Words (CBOW)**

CBOW predicts a target word based on its context, offering an efficient way to learn word representations.
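CBOW reverses the direction of Skip-Gram's training examples. The sketch below builds (context, target) examples and averages the context embeddings, which is the usual way CBOW combines context words into one input vector. The function names and the toy embeddings are hypothetical.

```python
def cbow_examples(tokens, window=2):
    """Return (context_words, target) examples for each position."""
    examples = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + window + 1]
        if context:                          # skip words with no neighbors
            examples.append((context, target))
    return examples

def average_context(context, embeddings):
    """Average the embedding vectors of the context words."""
    dim = len(next(iter(embeddings.values())))
    total = [0.0] * dim
    for word in context:
        for k, value in enumerate(embeddings[word]):
            total[k] += value
    return [t / len(context) for t in total]

examples = cbow_examples(["the", "quick", "brown", "fox"], window=1)
toy_embeddings = {"a": [1.0, 0.0], "b": [3.0, 2.0]}   # made-up vectors
averaged = average_context(["a", "b"], toy_embeddings)  # [2.0, 1.0]
```

Because several context words are collapsed into one averaged input, each position in the text yields a single prediction, which is part of why CBOW trains quickly.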

**10. Transfer Learning**

Transfer learning involves applying knowledge from one task to another. By reusing pre-trained models, we can significantly reduce training time and data requirements.
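One common pattern is to freeze a pre-trained feature extractor and train only a small new output layer on the new task. The toy sketch below imitates that pattern. The "pretrained" weights are made-up constants standing in for a real model, and every name here is an assumption for illustration.

```python
import math

# Hypothetical "pretrained" hidden layer: (weight, bias) per unit, kept frozen.
PRETRAINED_UNITS = ((1.0, 0.0), (-1.0, 0.5), (0.5, -0.5))

def frozen_features(x):
    """Reuse the pretrained layer as a fixed feature extractor."""
    return [math.tanh(w * x + b) for w, b in PRETRAINED_UNITS]

def predict(x, head, bias):
    return sum(h * f for h, f in zip(head, frozen_features(x))) + bias

def train_head(data, lr=0.1, epochs=500):
    """Train only the new linear head; the pretrained units never change."""
    head = [0.0] * len(PRETRAINED_UNITS)
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_features(x)
            err = sum(h * f for h, f in zip(head, feats)) + bias - y
            head = [h - lr * err * f for h, f in zip(head, feats)]
            bias -= lr * err
    return head, bias

def mse(data, head, bias):
    return sum((predict(x, head, bias) - y) ** 2 for x, y in data) / len(data)

# A small new task with only 11 labeled examples.
new_task = [(k / 5.0, 2.0 * math.tanh(k / 5.0) + 1.0) for k in range(-5, 6)]
head, bias = train_head(new_task)
```

Only four numbers are learned here (three head weights and one bias), which mirrors why transfer learning needs less data and training time than learning every layer from scratch.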

In summary, deep learning is a powerful tool that continues to evolve. While many techniques lack detailed theoretical explanations, they have proven effective through experimentation. Understanding these methods from the ground up is essential for anyone serious about AI development.
