How to build the basic verification flow of an SoC project

Introduction to the basic SoC simulation environment

I previously posted "How to build a basic Testbench for my SoC project (My Process)" on the forum. Below I list the most important points and what has changed since then.

Assume that this SoC has a CPU system, a memory controller, a bus topology, a pad ring (PAD), a clock/reset module (Clockreset), and several functional logic modules.

1. Embedded software (firmware) in the simulation environment

It consists of two parts: the initialization bootloader (usually fixed in ROM or stored in external flash), and the application-level program that is placed in external volatile memory after boot.

2. Use the Instruction Set Simulator (ISS) instead of CPU-IP.

There are both commercial and open-source ISSs, but few free open-source ARM ISSs exist. Since an ISS is not the real CPU anyway, I suggest finding an open-source one unless you need to verify the boot ROM code. The ISS can be compiled into a .so shared library, so the whole ISS C code does not have to be recompiled for every simulation (at compile time, give the linker the library path and library name; at run time, set LD_LIBRARY_PATH so the simulator can find the library).

The ISS needs a configuration file that describes the CPU's address space. Regions such as the program area and the stack are managed by the ISS (call this the memory space), while the DUT's memory addresses and register space are managed by the DUT (call this the IO space). The ISS must see the entire address space, decide from each address whether it belongs to the memory space or the IO space, and handle the access accordingly.
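The per-address decision can be pictured as a lookup over the regions listed in the ISS configuration file. The C sketch below is purely illustrative: the region table, base addresses, sizes, and the names `space_t`, `region_t`, and `classify` are hypothetical, and a real ISS has its own configuration format and callback interface.

```c
#include <stddef.h>
#include <stdint.h>

/* Who services an access: the ISS itself (memory) or the DUT (IO). */
typedef enum { SPACE_MEMORY, SPACE_IO, SPACE_UNMAPPED } space_t;

typedef struct {
    uint32_t base;  /* first address of the region */
    uint32_t size;  /* region length in bytes */
    space_t  space; /* which side owns the region */
} region_t;

/* Hypothetical address map, as an ISS configuration file might describe it. */
static const region_t addr_map[] = {
    { 0x00000000u, 0x00100000u, SPACE_MEMORY }, /* program area */
    { 0x00100000u, 0x00010000u, SPACE_MEMORY }, /* stack area */
    { 0x40000000u, 0x10000000u, SPACE_IO },     /* DUT register space */
    { 0x80000000u, 0x40000000u, SPACE_IO },     /* DUT memory (e.g. DDR) */
};

/* Decide, per access, whether the ISS handles it locally or forwards it. */
space_t classify(uint32_t addr) {
    for (size_t i = 0; i < sizeof addr_map / sizeof addr_map[0]; i++) {
        /* addr - base avoids overflow when base + size would wrap past 2^32 */
        if (addr >= addr_map[i].base && addr - addr_map[i].base < addr_map[i].size)
            return addr_map[i].space;
    }
    return SPACE_UNMAPPED;
}
```

An access classified as `SPACE_MEMORY` would be served from the ISS's own memory model, while `SPACE_IO` would be forwarded to the DUT, for example through a bus BFM driven by the simulator.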

3. Shared space

When the CPU (ISS) and the testbench need to interact, you can reserve an address range (for example 0x3000_0000 ~ 0x3100_0000) that is implemented as an array in the testbench. Take random register configuration as an example: constrained randomization is awkward to do in the ISS C program, so a testbench component writes the constrained-random values into the shared space, the C program on the ISS reads them from there, and then writes them to the registers.
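A minimal firmware-side sketch of this handshake, assuming the testbench fills a block of randomized words plus a `valid` flag at the start of the shared space. The layout (`shared_cfg_t`, `CFG_WORDS`, the `valid` flag) and the function name are hypothetical. On the target the function would be called with `(const shared_cfg_t *)SHARED_BASE` and the DUT register base; passing pointers keeps it testable on a host.

```c
#include <stdint.h>

/* Example window from the text; the layout below is hypothetical. */
#define SHARED_BASE 0x30000000u
#define CFG_WORDS   4u

typedef struct {
    volatile uint32_t cfg[CFG_WORDS]; /* constrained-random values from the TB */
    volatile uint32_t valid;          /* TB sets this to 1 once cfg[] is filled */
} shared_cfg_t;

/* Wait for the testbench, then copy each random value into a DUT register.
 * On the target: apply_random_cfg((const shared_cfg_t *)SHARED_BASE, regs). */
unsigned apply_random_cfg(const shared_cfg_t *sh, volatile uint32_t *regs) {
    while (sh->valid == 0u) {
        /* poll until the testbench sets the flag */
    }
    for (unsigned i = 0; i < CFG_WORDS; i++)
        regs[i] = sh->cfg[i];
    return CFG_WORDS;
}
```

The `volatile` qualifiers matter on the target: without them the compiler could hoist the `valid` read out of the loop and spin forever.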

4. Maintenance of the Define file

In an SoC project, the same definition may be used by the assembler code, the embedded C programs, the C reference model, and the testbench SV code. Maintaining several similar define files by hand is error-prone, so maintain only one and generate the rest automatically with a script.
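To illustrate the single-source idea, the sketch below keeps one table and emits C, SystemVerilog, and GNU-assembler (`.equ`) syntax from it. The names and addresses are made up, and in a real flow the table would be parsed from the master define file by the generator script (any scripting language works); C is used here only to keep all examples in one language.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* The single source of truth (hypothetical names and values). */
typedef struct { const char *name; unsigned long value; } def_t;

static const def_t defs[] = {
    { "SHARED_BASE", 0x30000000ul },
    { "UART0_BASE",  0x40001000ul },
    { "DMA_BASE",    0x40002000ul },
};

enum def_fmt { FMT_C, FMT_SV, FMT_ASM };

/* Render every definition in one target syntax into buf (cap must be > 0).
 * Returns the number of characters written. */
size_t gen_defines(enum def_fmt f, char *buf, size_t cap) {
    size_t used = 0;
    buf[0] = '\0';
    for (size_t i = 0; i < sizeof defs / sizeof defs[0]; i++) {
        const char *name = defs[i].name;
        unsigned long v = defs[i].value;
        int n;
        if (f == FMT_C)
            n = snprintf(buf + used, cap - used, "#define %s 0x%08lXUL\n", name, v);
        else if (f == FMT_SV)
            n = snprintf(buf + used, cap - used, "`define %s 32'h%08lX\n", name, v);
        else
            n = snprintf(buf + used, cap - used, ".equ %s, 0x%08lX\n", name, v);
        if (n < 0 || (size_t)n >= cap - used)
            break; /* out of space: stop (a truncated last line may remain) */
        used += (size_t)n;
    }
    return used;
}
```

Because every output comes from the same table, a changed address only has to be edited once and all generated files stay consistent.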

5. Memory Controller Module (DDRC) replacement

The DDRC is the most important bus slave in the system. It usually requires an initialization sequence (some DDRCs do not) and usually needs a DDR-PHY model, both of which slow down simulation. Moreover, when verifying functional modules, it is hard to create scenarios with varying bandwidth using the DDRC. Therefore, consider replacing the DDRC with a bus-slave BFM for verification.
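A bus-slave BFM used in place of the DDRC can be as simple as a flat memory plus a configurable wait-state generator, which makes it easy to sweep bandwidth scenarios. This is a behavioral sketch in C of that idea, not a bus-protocol implementation: `slave_bfm_t`, the wait-cycle range, and the xorshift32 generator are assumptions chosen for illustration. In a real testbench the same logic would sit behind the bus interface handshakes in SV.

```c
#include <stdint.h>
#include <string.h>

#define BFM_MEM_WORDS 1024u /* small model; addresses alias modulo its size */

/* Hypothetical slave BFM standing in for the DDRC. */
typedef struct {
    uint32_t mem[BFM_MEM_WORDS];
    uint32_t min_wait, max_wait; /* wait cycles per access, min <= max */
    uint32_t state;              /* xorshift32 state, never 0 */
} slave_bfm_t;

void bfm_init(slave_bfm_t *b, uint32_t min_wait, uint32_t max_wait, uint32_t seed) {
    memset(b->mem, 0, sizeof b->mem);
    b->min_wait = min_wait;
    b->max_wait = max_wait;
    b->state = seed ? seed : 1u; /* a zero seed would lock xorshift at 0 */
}

/* xorshift32: cheap, reproducible pseudo-random numbers for a given seed. */
static uint32_t bfm_rand(slave_bfm_t *b) {
    uint32_t x = b->state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    b->state = x;
    return x;
}

/* Wait cycles for one access, in [min_wait, max_wait] (assumes the range
 * is narrower than 2^32 so span does not wrap to 0). */
static uint32_t bfm_wait(slave_bfm_t *b) {
    uint32_t span = b->max_wait - b->min_wait + 1u;
    return b->min_wait + bfm_rand(b) % span;
}

/* Word accesses; each returns the wait cycles the bus would observe. */
uint32_t bfm_write(slave_bfm_t *b, uint32_t addr, uint32_t data) {
    b->mem[(addr >> 2) % BFM_MEM_WORDS] = data;
    return bfm_wait(b);
}

uint32_t bfm_read(slave_bfm_t *b, uint32_t addr, uint32_t *data) {
    *data = b->mem[(addr >> 2) % BFM_MEM_WORDS];
    return bfm_wait(b);
}
```

Changing the `[min_wait, max_wait]` range per run, or per phase of a run, produces the varying-bandwidth conditions that are hard to create with a real DDRC.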

6. Implementing printf

The embedded C testcases in an SoC environment have no standard stdio, so printf has to be implemented. printf takes a variable number of arguments, and the implementation relies on the calling convention: arguments are pushed onto the stack from right to left, so the first argument sits closest to the top of the stack, and the format string ends with '\0'. On the C side, only the address of the first argument (which is never 0) needs to be written to a designated location in the shared space. Once the SVTB has that address, it expands each % conversion using the argument addresses (the SV implementation is similar to printf in C). Note that: 1) the C program must make sure the argument address reaches the shared space promptly, rather than sitting in the cache or taking too long in transit; 2) when using an ISS, the strings to be printed live in the memory space, so the SV side must be able to see the memory space. When using a multi-core CPU RTL, control printing separately for each core (for example, allocate a separate shared-space area for each core and branch on the core ID inside the print function).
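The sketch below shows the mailbox idea in C with one deliberate change: instead of relying on the arguments being laid out on the stack (which depends on the ABI; on ARM, for example, the first arguments are passed in registers), the firmware copies the arguments into a static array with `va_arg` and publishes the addresses of the format string and of that array. The testbench-side expansion is also written in C here only to show the logic; in the real flow it is done in SV by reading memory. `print_mailbox_t`, `fw_printf`, `tb_render`, and the supported conversions (%d, %u, %x, %%) are all hypothetical.

```c
#include <stdarg.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

#define MAX_ARGS 8

/* Hypothetical mailbox in the shared space; fmt_addr is written last, so a
 * nonzero fmt_addr tells the testbench a message is ready. */
typedef struct {
    volatile uintptr_t args_addr; /* address of the packed argument words */
    volatile uintptr_t fmt_addr;  /* address of the NUL-terminated format */
} print_mailbox_t;

static print_mailbox_t mailbox;      /* on target: at a fixed shared-space address */
static uint32_t arg_words[MAX_ARGS]; /* must live in memory the TB can read */

/* Firmware side: supports %d, %u, %x and %% only, 32-bit arguments. */
void fw_printf(const char *fmt, ...) {
    va_list ap;
    int n = 0;
    va_start(ap, fmt);
    for (const char *p = fmt; *p != '\0'; p++) {
        if (*p != '%') continue;
        p++;                         /* look at the conversion character */
        if (*p == '\0') break;       /* lone '%' at the end */
        if (n >= MAX_ARGS) continue; /* extra arguments are dropped */
        if (*p == 'd') arg_words[n++] = (uint32_t)va_arg(ap, int);
        else if (*p == 'u' || *p == 'x') arg_words[n++] = va_arg(ap, unsigned);
    }
    va_end(ap);
    mailbox.args_addr = (uintptr_t)arg_words;
    mailbox.fmt_addr = (uintptr_t)fmt; /* publish last */
}

/* Testbench side (SV in the real flow): expand the format from memory.
 * Call only when fmt_addr != 0; cap must be >= 1. Returns chars written. */
size_t tb_render(const print_mailbox_t *mb, char *out, size_t cap) {
    const char *fmt = (const char *)mb->fmt_addr;
    const uint32_t *args = (const uint32_t *)mb->args_addr;
    size_t o = 0;
    int n = 0;
    /* each step writes at most 11 chars; o + 12 < cap leaves room for
     * them plus the terminating NUL */
    for (const char *p = fmt; *p != '\0' && o + 12 < cap; p++) {
        if (*p != '%') { out[o++] = *p; continue; }
        p++;
        if (*p == '\0') break;
        if (*p == '%') { out[o++] = '%'; continue; }
        if (*p != 'd' && *p != 'u' && *p != 'x') { out[o++] = '%'; out[o++] = *p; continue; }
        if (n >= MAX_ARGS) { out[o++] = '?'; continue; }
        uint32_t v = args[n++];
        if (*p == 'd')      o += (size_t)snprintf(out + o, cap - o, "%d", (int)(int32_t)v);
        else if (*p == 'u') o += (size_t)snprintf(out + o, cap - o, "%u", (unsigned)v);
        else                o += (size_t)snprintf(out + o, cap - o, "%x", (unsigned)v);
    }
    out[o] = '\0';
    return o;
}
```

Writing `fmt_addr` last acts as the "message ready" signal; on real hardware this store must also bypass or be flushed from the cache, which is the timing concern raised in note 1) above.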

Functions such as malloc can also be implemented using the shared space.
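For example, a bump allocator that hands out addresses inside a DUT-visible memory window is often enough for testcases that need buffers or descriptors. This C sketch is an assumption of how it could look (`dut_heap_t`, `dut_malloc`, and the window are hypothetical). It has no free(), on the assumption that the heap is simply reset between cases, and it uses 0 as the failure value, so the window must not start at address 0.

```c
#include <stdint.h>

/* Hypothetical bump allocator over a DUT-visible memory window. */
typedef struct {
    uint32_t end;  /* one past the last usable address */
    uint32_t next; /* next free address */
} dut_heap_t;

void dut_heap_init(dut_heap_t *h, uint32_t base, uint32_t size) {
    h->end = base + size; /* assumes the window does not wrap past 2^32 */
    h->next = base;
}

/* Returns an address aligned to `align` (a power of two), or 0 when the
 * window is exhausted. Nothing is ever freed; reset the heap between cases. */
uint32_t dut_malloc(dut_heap_t *h, uint32_t size, uint32_t align) {
    uint32_t addr = (h->next + align - 1u) & ~(align - 1u);
    if (addr < h->next || addr > h->end || size > h->end - addr)
        return 0; /* alignment wrapped, or not enough room */
    h->next = addr + size;
    return addr;
}
```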

7. Collaborative verification of multiple modules

At the system level, it is often necessary to run a system-level case that simulates the whole system working together (such a case is usually also suitable for power analysis). Building this kind of testcase can take a lot of effort. Use a hardware accelerator if one is available; if the case can only run in a pure simulation environment, simplify it as much as possible.

8. Flow of a single case

Compile the DUT and testbench → compile the firmware → start the simulation (load the firmware at the right time, and generate random control data and write it into the shared space). If the firmware is loaded into the DDR model and the DDRC initialization performs data training, which writes data to memory, make sure the loaded data is not overwritten.

Compared with system-level (or subsystem-level) simulation environments, module-level verification has these advantages:

1) Faster simulation

2) Better control over randomization

3) Easier error injection

4) Easier switching between functions and modes

Simulation tools released in 2010 and later support merging module-level and system-level coverage, which speeds up coverage closure.

Note that although a module-level environment can in theory cover everything a system-level environment covers, it is unlikely to reach 100% coverage of real scenarios with limited staff and time. For example, the data-enable signal in video synchronization has so many variations that randomization may not reach everything that can happen in the real system. In short, the key point is that a module-level environment may not cover situations that actually occur in the system.

Module-level environment infrastructure

Besides the verification components (monitor, driver, scoreboard, etc.), the basic module-level environment contains bus master and slave models connected to the DUT, and a CPU-Model that controls the DUT. Note: the DUT in this module-level environment is a complete module, not a submodule inside a module.

Assume the DUT is a bus master that initiates memory accesses. The CPU-Model configures the registers and performs some memory accesses. For simplicity, the module-level environment lets the CPU-Model access the bus slave's space directly, without going through the bus.

Reusing module-level testcases at the system level

When staff and time are limited, module-level code must be reusable at the system level. Reusing testbench components (driver, monitor, model, scoreboard, etc.) is generally simple; the troublesome part is reusing testcases in the SoC system-level environment, where a testcase is a C program running on the CPU. How testcases are built depends on how the CPU-Model is implemented in the TB architecture. I mainly use the following two solutions, both with testcases written in C.

Option 1:

Use an ISS, consistent with the basic SoC simulation environment.

Option 2:

Use DPI. The CPU-Model is an SV model that implements register accesses and accesses to the memory address space. The more complicated part is interrupt emulation. You can use SV fine-grain process control (process::self()) to implement a main task and an IRQ task that emulate "the CPU suspends the main program when it enters the interrupt and returns to the main program after the interrupt handler finishes" (main_task.suspend(); ...; main_task.resume();). The SV tasks that drive the underlying hardware interface are made available to the C program through DPI.

With a DPI implementation, pay attention to code that touches the underlying hardware interface and to code that accesses the DUT directly from C (such as dereferencing pointers into DUT memory); reference code provided by IP vendors is especially likely to contain such code. For example, in USB or Ethernet, the data structures of the hardware/software interaction protocol stack live in memory: the software maintains a data structure and operates on it through pointers to its address. Such code cannot be used directly in a DPI environment (pointer operations will not work; they must be rewritten using the underlying hardware-access routines). Firmware for such modules may be off-the-shelf and should be reused in simulation as much as possible. A simple solution is to give up the module-level environment and verify in the system-level environment, or to use an ISS. Even in an ISS environment the firmware may need changes: for example, the data structures above must be placed at DUT memory addresses (for a large struct assignment, first malloc a space and then fill it in; it is enough that malloc returns DUT memory addresses).
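The pointer problem can be worked around by serializing such data structures word by word through the environment's memory-access routines instead of dereferencing C pointers. In this C sketch, `desc_t`, its field order, and `mem_write32`/`mem_read32` are hypothetical; the field layout and endianness must match what the DUT actually fetches, and the mock memory stands in for an ISS store or a DPI backdoor access.

```c
#include <stdint.h>

/* Hypothetical DMA descriptor fetched by the DUT; 'next' holds a DUT
 * address, not a C pointer. Field order must match the hardware. */
typedef struct {
    uint32_t src, dst, len, next;
} desc_t;

/* Word accesses supplied by the environment (ISS store or DPI backdoor).
 * A host mock is used here so the sketch runs stand-alone. */
static uint32_t mock_mem[256];
void mem_write32(uint32_t addr, uint32_t data) { mock_mem[(addr >> 2) & 255u] = data; }
uint32_t mem_read32(uint32_t addr) { return mock_mem[(addr >> 2) & 255u]; }

/* Replaces code like ((desc_t *)addr)->len = ..., which only works when C
 * pointers are DUT addresses, with explicit word writes. */
void desc_store(uint32_t addr, const desc_t *d) {
    mem_write32(addr + 0u,  d->src);
    mem_write32(addr + 4u,  d->dst);
    mem_write32(addr + 8u,  d->len);
    mem_write32(addr + 12u, d->next);
}

void desc_load(uint32_t addr, desc_t *d) {
    d->src  = mem_read32(addr + 0u);
    d->dst  = mem_read32(addr + 4u);
    d->len  = mem_read32(addr + 8u);
    d->next = mem_read32(addr + 12u);
}
```

Linked structures are chained through DUT addresses stored in fields such as `next`, so the same descriptor chain is valid whether the DUT or the C code walks it.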

Other options:

Write the testcases directly in SV instead of C, or replace the CPU-Model with the real CPU IP plus a boot ROM and boot RAM.

Architecture evaluation

My first recommendation is still a hardware accelerator. Note that an architecture evaluation should preserve the frequency ratios (the bus access frequency of each memory-accessing module, the frequency of each component in the bus topology, and the operating frequencies of the memory controller and the memory). Personally, I feel that hardware accelerators such as Palladium and Veloce are better suited to this work.

If you evaluate by simulation, pay attention to the following:

1) Realism of the traffic patterns. Existing module RTL reflects the true memory access behavior and latency tolerance, but the environment is complex to build, simulation is slow, and the RTL usually needs an initialization sequence before it works. I suggest building BFMs that mimic the memory access behavior; a BFM can read a configuration file to emulate realistic scenarios.

2) Automatic integration of the infrastructure. In a moderately complex SoC architecture, integrating the bus topology can be complicated (emacs's auto-connection features are not very convenient for these components, and manually matching some special signal bit widths is error-prone). Automated tools for this kind of integration existed many years ago. Commercial tools offer many functions but may not directly meet the needs of an individual project, so consider developing your own automatic integration tool. The current low-power flow removes the need to write extra code in the architecture-integration RTL, which makes automatic integration easier.

The architecture evaluation environment also needs performance monitors on the memory controller and the bus architecture modules to calculate throughput, memory controller efficiency, the latency of each functional module's accesses, and so on. Use the throughput to judge whether the evaluation environment behaves as expected, that is, whether the amount of data transferred to and from memory roughly matches expectations. Under this premise, check what efficiency the memory controller reaches and which modules in the system see unreasonably large latency (which would force those modules to be designed with deeper FIFOs). Run different simulations by adjusting the priority policies of the memory controller and bus topology modules and the clock frequencies, or by adding or removing bus topology components and Master/Slave ports.
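As a rough illustration of the statistics such a performance monitor reports, the C sketch below summarizes a list of completed transactions into throughput, average and maximum latency, and efficiency relative to a peak rate. The record format (`txn_t`), the definition of efficiency as achieved over peak bytes per cycle, and the function name are assumptions; real memory-controller efficiency metrics may also account for refresh, bank conflicts, or read/write turnaround separately.

```c
#include <stddef.h>
#include <stdint.h>

/* One completed bus transaction as a performance monitor might log it. */
typedef struct {
    uint64_t req_cycle;  /* cycle the request was issued */
    uint64_t done_cycle; /* cycle the last data beat completed */
    uint32_t bytes;      /* payload size */
} txn_t;

typedef struct {
    double   bytes_per_cycle; /* average throughput over the window */
    double   avg_latency;     /* mean request-to-completion cycles */
    uint64_t max_latency;     /* worst-case latency seen */
    double   efficiency;      /* achieved / peak throughput, 0..1 */
} perf_t;

/* Summarize n transactions observed in a window of `window` cycles;
 * peak_bytes_per_cycle is the theoretical maximum of the measured port. */
perf_t perf_summary(const txn_t *t, size_t n, uint64_t window, double peak_bytes_per_cycle) {
    perf_t p = {0};
    uint64_t total_bytes = 0, total_lat = 0;
    for (size_t i = 0; i < n; i++) {
        uint64_t lat = t[i].done_cycle - t[i].req_cycle;
        total_bytes += t[i].bytes;
        total_lat += lat;
        if (lat > p.max_latency) p.max_latency = lat;
    }
    if (window > 0) p.bytes_per_cycle = (double)total_bytes / (double)window;
    if (n > 0) p.avg_latency = (double)total_lat / (double)n;
    if (peak_bytes_per_cycle > 0) p.efficiency = p.bytes_per_cycle / peak_bytes_per_cycle;
    return p;
}
```

Multiplying `bytes_per_cycle` by the clock frequency gives bandwidth; for example, 4 bytes/cycle at 400 MHz is 1.6 GB/s. A large `max_latency` for one master is the kind of result that points to a FIFO that needs to be deeper.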

Use of VIP

We usually use VIP as the BFM for verifying complex standard interface protocols.

The advantages of VIP: there is no need to develop a dedicated BFM; it comes with complete testbench components; some VIPs have documentation with fairly complete test plans and examples; and randomization and error injection are thorough. The disadvantages: the code is not visible, so it is easy to get stuck when problems arise; VIP is installed like a tool and may depend on specific OS and simulator versions; the EDA vendor's local support staff is often insufficient; and VIP usually runs slower.

If capability and resources allow, the BFM can be developed in-house. A faster approach is to use a reliable RTL IP as the BFM: for example, to verify a USB host, use a USB device or USB OTG RTL IP as the BFM. Advantages: the BFM code quality is high, debug visibility is good, and it ports well to a hardware accelerator later (in some cases the PHY can be dropped and the digital interfaces connected directly). Disadvantages: the amount of code to develop is not small; some PHY models may be a problem (for example, for one-way data transmission where the master side converts parallel data to LVDS and the slave side converts LVDS back to parallel, such a PHY model may have to be developed separately); the RTL usually needs an initialization sequence; and randomization and error injection are less convenient.

In my view, if the DUT is developed in-house, its code quality may not yet be mature, so commercial VIP is more suitable. If the DUT is silicon-proven IP, a self-developed BFM is sufficient.

Coverage-Driven and Assertion-Based

Personally, I think code coverage is the most important metric; it must be collected and checked carefully.

Functional coverage (including assertion coverage) should reflect the Testplan; in my view it only measures how much of the Testplan is covered. The Testplan itself may be incomplete, so 100% functional coverage does not mean that verification is sufficient.

Assertions are suitable for verifying the timing of moderately simple protocols, but I feel that EDA vendors have not invested much in assertions in recent years. For a submodule inside a large module, assertions are very suitable: they can describe and check that submodule's interface specifically.

Simulation acceleration

The fastest acceleration technique is, of course, a hardware accelerator.

Replacing the CPU and DDRC, as described in the introduction to the basic SoC simulation environment, already speeds up simulation. Besides that, I use the following acceleration methods:

1) Replace modules that are not needed with dummies in the system-level simulation environment. This needs two scripts: one that generates the dummy files automatically, and one that automatically swaps the original RTL for the dummy files.

2) Shorten the simulated SoC power-on startup time. Usually a state machine advances through its states based on several counters; the method is to change the counters' initial values or the terminal values required for each transition. This is easy at RTL, but at gate level it is troublesome to find the signals, so agree with the back-end (flow) colleagues in advance to keep those signal names.

3) Some IPs in the DUT are expensive to simulate; consider replacing them with simplified models, for example PLL, DCM, and some PHY models.

4) Some RTL coding styles are expensive to simulate. For example, assigning initial values to a large two-dimensional array on every rising clock edge while reset is asserted. It is best to wrap such code in `ifdef / `else / `endif and rewrite it as an initial block for simulation.

5) Reduce overly frequent DPI interactions.

6) Use techniques such as separate compile and partition compile with caution; they can have a negative effect.
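For item 1 (replacing unneeded modules with dummies), the dummy generator mostly needs the port list. The C sketch below emits a Verilog stub with the same ports and all outputs tied to zero; `port_t`, `gen_dummy`, and the example module are hypothetical, and a real script would extract the ports from the RTL rather than from a hand-written table. The second script, which swaps the stub into the file list in place of the real RTL, is not shown.

```c
#include <stdarg.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical port description of the module to be replaced. */
typedef struct { const char *name; int is_output; int width; } port_t;

/* printf-style append that never overruns buf and keeps it NUL-terminated. */
static void appendf(char *buf, size_t cap, size_t *used, const char *fmt, ...) {
    va_list ap;
    int k;
    if (*used + 1 >= cap) return; /* buffer already full */
    va_start(ap, fmt);
    k = vsnprintf(buf + *used, cap - *used, fmt, ap);
    va_end(ap);
    if (k < 0) return;
    *used += ((size_t)k < cap - *used) ? (size_t)k : cap - *used - 1;
}

/* Emit a Verilog module with the same ports whose outputs are tied to 0. */
size_t gen_dummy(const char *module, const port_t *p, size_t n, char *buf, size_t cap) {
    size_t used = 0;
    if (cap == 0) return 0;
    buf[0] = '\0';
    appendf(buf, cap, &used, "module %s (\n", module);
    for (size_t i = 0; i < n; i++)
        appendf(buf, cap, &used, "  %s [%d:0] %s%s\n",
                p[i].is_output ? "output" : "input ", p[i].width - 1,
                p[i].name, (i + 1 < n) ? "," : "");
    appendf(buf, cap, &used, ");\n");
    for (size_t i = 0; i < n; i++)
        if (p[i].is_output)
            appendf(buf, cap, &used, "  assign %s = %d'b0;\n", p[i].name, p[i].width);
    appendf(buf, cap, &used, "endmodule\n");
    return used;
}
```

Tying outputs to constant zero keeps the rest of the system quiet; if downstream logic needs a handshake (for example a ready signal), that port would have to be tied to 1 instead.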

Gate-level simulation

In functional verification there are generally several kinds of gate-level simulation:

1) Synthesized netlist simulation

2) DFT netlist simulation

3) Post-P&R netlist simulation with back-annotated SDF

4) FPGA synthesis netlist simulation

5) GTECH netlist simulation

The post-synthesis netlist is similar to the post-DFT netlist, so it is generally enough to run the post-DFT one. Except for the post-P&R simulation with SDF, these are all zero-delay gate-level simulations. A few extra steps are usually needed:

1) Add a clk-to-Q delay to the sequential cells (DFFs, etc.) in the standard-cell library files.

2) Make sure the SRAM model does not drive X on its outputs when it is not being accessed.

3) If the gate-level netlist contains DFFs without a reset pin, they generally need to be found and initialized with $deposit. (To find them, ask the back-end colleagues for the SDF generated from the matching netlist version, then parse the SDF file to extract the DFF instances.)

4) In gate-level simulation with back-annotated SDF and timing checks enabled, remember to disable the timing checks on clock-domain-crossing logic such as two-flop (2-DFF) synchronizers.
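For step 3, the SDF parsing can be quite simple, because each `CELL` entry lists its `CELLTYPE` followed by its `INSTANCE`. This C sketch collects the instance paths of flops whose cell type is in a list of no-reset cells. The cell names `DFFQX1`/`DFFQX2` are hypothetical and must be replaced with the library's real names, and the sketch assumes each `INSTANCE` follows its `CELLTYPE` inside the same `CELL`.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical no-reset flop cell names; use the real library's names. */
static const char *const noreset_cells[] = { "DFFQX1", "DFFQX2" };

/* 1 if the len-character cell name matches a no-reset flop type. */
static int is_noreset(const char *cell, size_t len) {
    for (size_t i = 0; i < sizeof noreset_cells / sizeof noreset_cells[0]; i++)
        if (strlen(noreset_cells[i]) == len && strncmp(noreset_cells[i], cell, len) == 0)
            return 1;
    return 0;
}

/* Scan SDF text; copy the INSTANCE path of each matching CELL into out[]
 * (truncated to 255 chars). Returns the number of instances found. */
size_t find_noreset_flops(const char *sdf, char out[][256], size_t max_out) {
    size_t found = 0;
    int pending = 0; /* set by a matching CELLTYPE, cleared by its INSTANCE */
    const char *p = sdf;
    while (found < max_out && (p = strchr(p, '(')) != NULL) {
        p++; /* keyword starts right after '(' */
        if (strncmp(p, "CELLTYPE", 8) == 0) {
            const char *q = strchr(p, '"');
            const char *e = q ? strchr(q + 1, '"') : NULL;
            pending = (q && e) ? is_noreset(q + 1, (size_t)(e - q - 1)) : 0;
        } else if (pending && strncmp(p, "INSTANCE", 8) == 0) {
            size_t len;
            p += 8;
            while (*p == ' ' || *p == '\t') p++;
            len = strcspn(p, ") \t\r\n");
            if (len > 255) len = 255;
            memcpy(out[found], p, len);
            out[found][len] = '\0';
            found++;
            pending = 0;
        }
    }
    return found;
}
```

Each collected path can then be turned into a deposit command for the simulator; the exact command syntax depends on the tool, and the hierarchy separator follows the `DIVIDER` setting in the SDF header.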

Simulating the FPGA synthesis netlist is generally not required, but it is worth doing when the FPGA timing report, FPGA functional simulation, CDC, and lint checks are all clean and FPGA synthesis itself is suspected of causing problems. I have found several problems by simulating the post-synthesis FPGA netlist: 1) Xilinx V7 synthesis by default mapped a complex case statement in the RTL to block RAM, which introduced a timing difference in the implementation; 2) FPGA synthesis implemented some RTL operations directly with internal DSPs, and the function mapped onto the DSP turned out to be synthesized incorrectly. The signal names in an FPGA synthesized netlist are very messy, so if the testbench probes or forces internal signals, it is hard to get it to compile.

GTECH netlist simulation is rarely needed. If a GTECH netlist is used in place of RTL on the FPGA, make sure the behavioral descriptions of the FPGA version of the GTECH cells are consistent with the ASIC versions; otherwise it is easy to fall into a trap.

Post-P&R gate-level simulation focuses on more accurate IR-drop and power data, and on confirming that the timing constraints are complete and correct. Running only typical cases is recommended.

Simulation verification automation

Besides the testbench's own automatic checking mechanism, here are some automation techniques (many of them run automatically from crontab):

1) Automatically check out the code every day, run a mini-regression, and email the results to the project team.

2) Check for new tags every few hours; when a new tag appears, update to it, run a mini-regression, and send an email automatically.

3) Automatically list all code check-ins every day and email the list.

4) Automatically summarize the unresolved issues every day (bug-tracking systems such as Bugzilla, issue trackers, and Jira have this built in, so you may not need to implement it yourself).

5) Automatic code backup (some code may still be under development and not ready to check in to the repository; if MIS does not back up such code automatically, you may need your own backup program).

6) Besides the automatic comparison environment, a program that parses the compile and simulation logs is called during regression.
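For item 6, a log checker can be a short pattern scan. In this C sketch the patterns are examples only: `Error-[` is how VCS prefixes errors, `*E,` is the Cadence style, and `UVM_ERROR @` matches UVM report lines but not the `UVM_ERROR :` count in the report summary. Adjust them, and the hypothetical pass banner `TEST PASSED`, to what your tools and testbench actually print. A missing pass banner is treated as a separate outcome because it usually means the case hung or crashed.

```c
#include <stddef.h>
#include <string.h>

typedef enum { RESULT_PASS, RESULT_FAIL, RESULT_UNKNOWN } result_t;

/* Example patterns only; match them to what your tools really print. */
static const char *const fail_patterns[] = {
    "Error-[",     /* VCS compile/elaboration/runtime errors */
    "*E,",         /* Cadence-style errors */
    "UVM_ERROR @", /* UVM error reports (not the summary count line) */
    "UVM_FATAL @",
    "TEST FAILED", /* hypothetical testbench banner */
};
static const char pass_banner[] = "TEST PASSED"; /* hypothetical banner */

/* Any failure pattern wins; otherwise the pass banner must be present. */
result_t classify_log(const char *log) {
    for (size_t i = 0; i < sizeof fail_patterns / sizeof fail_patterns[0]; i++)
        if (strstr(log, fail_patterns[i]) != NULL)
            return RESULT_FAIL;
    return strstr(log, pass_banner) != NULL ? RESULT_PASS : RESULT_UNKNOWN;
}
```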

If a function has no automatic checking mechanism, try to reduce the manual comparison workload. For example, for an image-algorithm module whose C code cannot be found but whose RTL is golden, and where running a real image pattern takes a long time, you can package the images generated during regression into a web page and review them in a browser.
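A minimal sketch of the web-page idea in C: write one HTML page that shows every image produced by the regression together with its file name. The function name and file names are hypothetical, and the sketch assumes the names need no HTML escaping.

```c
#include <stdio.h>
#include <string.h>

/* Write a minimal HTML page showing each regression image with its name.
 * Assumes file names need no HTML escaping. Returns 0 on success. */
int write_gallery(FILE *f, const char *const *images, size_t n) {
    fputs("<!DOCTYPE html>\n<html><body>\n", f);
    for (size_t i = 0; i < n; i++)
        fprintf(f, "<figure><img src=\"%s\" width=\"320\"><figcaption>%s</figcaption></figure>\n",
                images[i], images[i]);
    fputs("</body></html>\n", f);
    return ferror(f) ? -1 : 0;
}
```

In a regression script this would be called with a file opened in the results directory, and the page then opened in a browser to review all images side by side.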

Scripting languages are a very important part of simulation work; we generally use shell, Perl, Tcl, and Python. While learning a scripting language, I recommend forcing yourself to solve the problems you run into with the language you are learning.

Verification project management

In the post "Multimedia SoC project Verification Project Leader work content introduction (discussion)" (Http://bbs.eetop.cn/thread-581216-1-1.html), I listed the leader's work in detail; a Verification Engineer's work is basically a subset of the Verification Project Leader's.

That post follows the project timeline: preparation before the project starts → project started but the first integrated code not yet delivered → version 0.5 → version 0.75 → version 0.9 → version 1.0 → before TO (tape-out). Here I list a few things the post does not mention.

The leader should keep an eye on the verification process. Before FPGA verification, besides simulation and checking the FPGA timing, static checks such as CDC and lint should be done.

The leader should promptly train new team members on the basics of the working environment, so that nobody's efficiency suffers because of the environment. For example, different tools have different machine requirements: some need a large cache, others a large amount of memory, so factors such as cache size, memory, CPU core count, and CPU frequency may need to be considered. If MIS does not partition LSF compute resources by machine performance, LSF's default allocation policy may dispatch jobs to unsuitable machines.

Take care that verification engineers do not rely too much on the module-level environment. For example, when FPGA testing reports a problem, an engineer may conclude that there is no functional issue just because the problem cannot be reproduced at the module level.

The leader must review every bug that escaped during development. In the development phase these are usually bugs found late in the project by design engineers running the module-level environment, and bugs found on the FPGA. Some bugs were missed simply because FPGA testing reached them before simulation verification did; the bugs that verification engineers genuinely missed deserve special attention.

Power data usually comes from a VCD or SAIF file dumped from gate-level simulation. As designs grow, the VCD file may become too large; in that case, talk early with the colleagues who run power analysis to find a suitable solution. Some hardware accelerators can analyze internally which time windows have heavy toggle activity, and you can dump VCD for just those windows. Note that when a very large number of signals is dumped, compressed VCD and FSDB files end up similar in size (much of the storage goes to the signal-name table). In that case, dump VCD directly, which avoids introducing the waveform-dump PLI and the later format conversion.

In theory, verification is never complete, and something can always be missed. The leader cannot try to do everything; when resources are limited, some things have to be dropped or postponed.

Record, summarize, and analyze promptly

Projects with a high degree of reuse are prone to errors caused by relying on past experience, so be cautious and strengthen reviews of everything that changed.

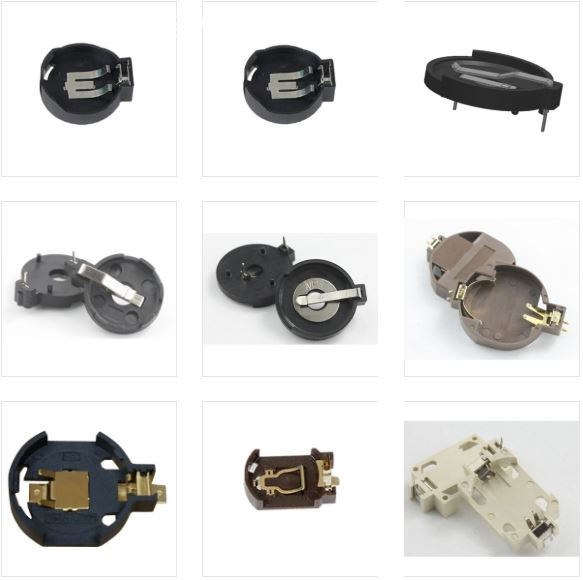
